Multi-View 3D Object Detection Network for Autonomous Driving

The paper proposes a sensor fusion framework that takes a LiDAR point cloud and RGB images as input and predicts 3D bounding boxes. Laser scanners capture depth information, while cameras capture much richer semantic information. LiDAR-based methods achieve more accurate 3D localization, whereas image-based methods achieve higher accuracy in 2D box evaluation.

- Encodes the sparse 3D point cloud with a compact multi-view representation.

- Generates 3D candidate boxes efficiently from the bird’s eye view representation of the 3D point cloud.

- Uses a deep fusion scheme to combine features from multiple views.

- Outperforms the state of the art on the KITTI benchmark by around 25% average precision (AP) on 3D localization and around 30% AP on 3D detection.
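The bird's eye view encoding mentioned in the list above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's exact encoding: it assumes points are given as `(x, y, z)` in metres with x forward, y left and z up, and it keeps only a maximum-height map and a point-count map per grid cell. The function name `encode_bev`, the ranges and the cell size are all hypothetical choices made for the example.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up), in metres


def encode_bev(
    points: Sequence[Point],
    x_range: Tuple[float, float] = (0.0, 4.0),   # illustrative range, not from the paper
    y_range: Tuple[float, float] = (-2.0, 2.0),  # illustrative range, not from the paper
    cell: float = 1.0,                           # illustrative cell size in metres
) -> Tuple[List[List[float]], List[List[int]]]:
    """Discretize a point cloud into a 2D grid seen from above.

    Returns (height_map, count_map), both indexed as [row][col], where rows
    follow x and columns follow y. Empty cells keep height 0.0 and count 0.
    """
    rows = int((x_range[1] - x_range[0]) / cell)
    cols = int((y_range[1] - y_range[0]) / cell)
    height = [[0.0] * cols for _ in range(rows)]
    count = [[0] * cols for _ in range(rows)]

    for x, y, z in points:
        # Drop points that fall outside the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        r = int((x - x_range[0]) / cell)
        c = int((y - y_range[0]) / cell)
        # Keep the highest point seen so far in this cell.
        if count[r][c] == 0 or z > height[r][c]:
            height[r][c] = z
        count[r][c] += 1

    return height, count
```

The result is a dense, image-like grid, which is what makes it possible to run an ordinary 2D convolutional network over a sparse point cloud.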

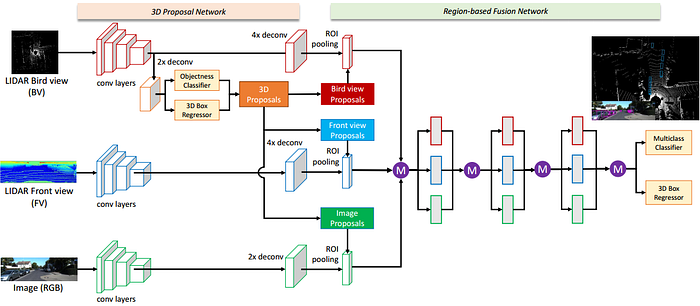

- The MV3D detection network consists of two parts:

1. 3D proposal network

- Uses the bird's eye view representation of the point cloud to generate 3D box proposals.

2. Region-based fusion network
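To make the fusion step more concrete, here is a small sketch of one way per-view features could be combined layer by layer. It assumes the features of each view are plain lists of floats of equal length and that views are joined by an element-wise mean; these notes do not state the join operation, so treat that choice, and the helper names `elementwise_mean` and `deep_fusion`, as assumptions for illustration.

```python
from typing import Callable, List, Sequence

Feature = List[float]
Transform = Callable[[Feature], Feature]


def elementwise_mean(features: Sequence[Feature]) -> Feature:
    """Average several equal-length feature vectors position by position."""
    n = len(features)
    return [sum(vals) / n for vals in zip(*features)]


def deep_fusion(
    view_features: Sequence[Feature],
    layers: Sequence[Sequence[Transform]],
) -> Feature:
    """Fuse per-view features, then repeatedly branch and re-fuse.

    view_features: one feature vector per view (e.g. bird's eye view,
        front view, image).
    layers: for each layer, one transform per view branch. Every branch
        receives the fused features from the previous layer.
    """
    fused = elementwise_mean(view_features)
    for per_view_transforms in layers:
        branch_outputs = [transform(fused) for transform in per_view_transforms]
        fused = elementwise_mean(branch_outputs)
    return fused
```

Compared with fusing once at the input or once at the output, this pattern lets intermediate features from different views interact at every layer.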

In MV3D:

Input → LiDAR bird's eye view, LiDAR front view, RGB image

Proposals generated on → LiDAR bird's eye view

Each 3D proposal is projected into the three views (LiDAR bird's eye view, LiDAR front view, and RGB image). The resulting regions are then passed through the neural network.
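The projection step can be sketched as follows. This is a rough illustration, assuming a box given by its centre, size and yaw in LiDAR coordinates (x forward, y left, z up), a cylindrical front view measured in radians, and a 3x4 matrix `P` that maps homogeneous LiDAR coordinates to pixels. All function names and the matrix `P` are hypothetical; a real setup would take `P` from the sensor calibration.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Rect = Tuple[float, float, float, float]  # (min_u, min_v, max_u, max_v)


def box_corners(cx: float, cy: float, cz: float,
                length: float, width: float, height: float,
                yaw: float = 0.0) -> List[Point3]:
    """Return the 8 corners of a 3D box rotated by yaw about the z axis."""
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                x = cx + dx * math.cos(yaw) - dy * math.sin(yaw)
                y = cy + dx * math.sin(yaw) + dy * math.cos(yaw)
                corners.append((x, y, cz + dz))
    return corners


def bounding_rect(points_2d: Sequence[Tuple[float, float]]) -> Rect:
    """Axis-aligned rectangle enclosing a set of 2D points."""
    us = [p[0] for p in points_2d]
    vs = [p[1] for p in points_2d]
    return (min(us), min(vs), max(us), max(vs))


def to_bev(corners: Sequence[Point3]) -> Rect:
    """Bird's eye view: drop the height and keep (x, y) in metres."""
    return bounding_rect([(x, y) for x, y, _ in corners])


def to_front_view(corners: Sequence[Point3]) -> Rect:
    """Front view: (azimuth, elevation) angles in radians."""
    return bounding_rect([
        (math.atan2(y, x), math.atan2(z, math.hypot(x, y)))
        for x, y, z in corners
    ])


def to_image(corners: Sequence[Point3],
             P: Sequence[Sequence[float]]) -> Rect:
    """Image view: apply the 3x4 matrix P, then divide by the last coordinate."""
    pixels = []
    for x, y, z in corners:
        u, v, w = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in P)
        pixels.append((u / w, v / w))
    return bounding_rect(pixels)
```

For example, with a box 10 m ahead and a toy matrix `P` (focal length 100 px, principal point at (50, 50), depth along x):

```python
corners = box_corners(10.0, 0.0, 0.0, length=4.0, width=2.0, height=2.0)
P = [[50.0, -100.0, 0.0, 0.0],
     [50.0, 0.0, -100.0, 0.0],
     [1.0, 0.0, 0.0, 0.0]]
bev_roi = to_bev(corners)
front_roi = to_front_view(corners)
image_roi = to_image(corners, P)
```

Each rectangle marks the region from which that view's features would be pooled for the proposal.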
